First slide of the presentation: How to train face recognition models on millions of persons?

Evgeny Smirnov, Senior Researcher, Speech Technology Center

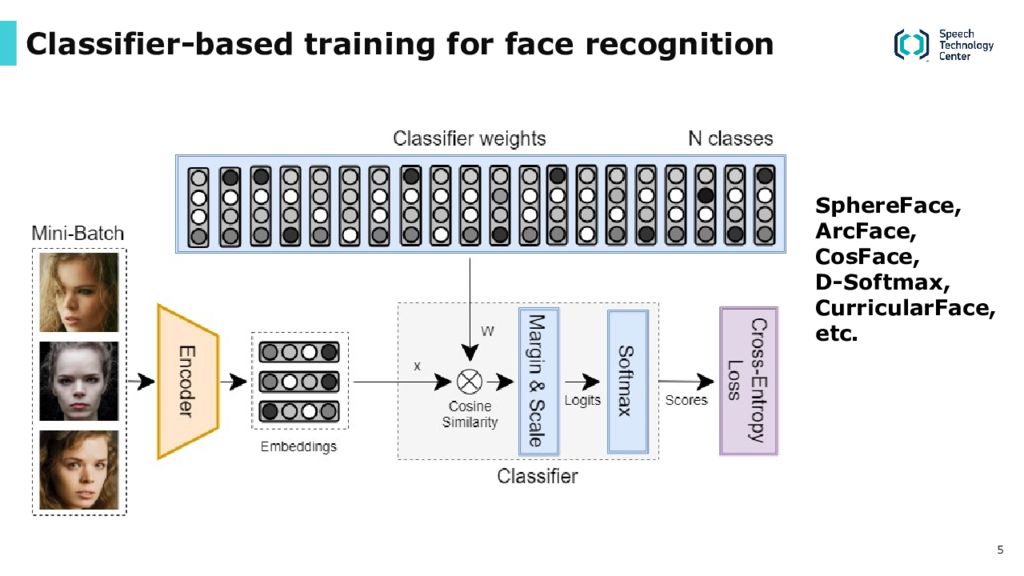

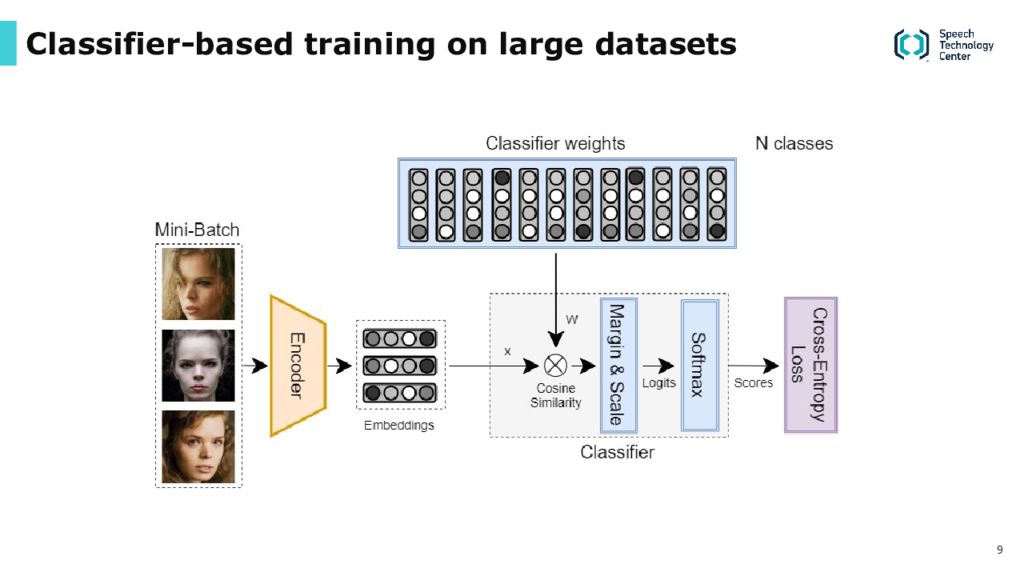

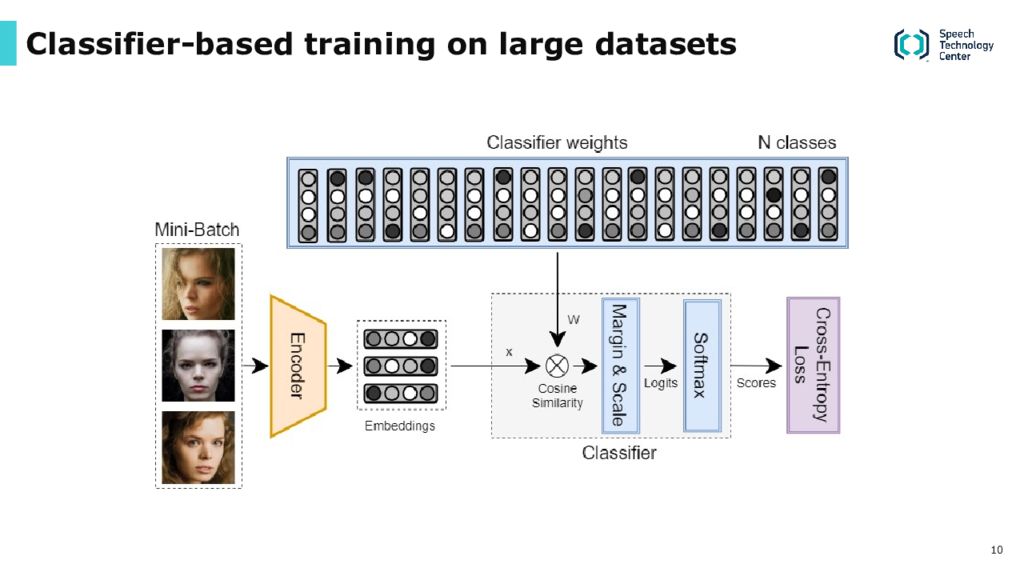

Slide 5: Classifier-based training for face recognition

SphereFace, ArcFace, CosFace, D-Softmax, CurricularFace, etc.

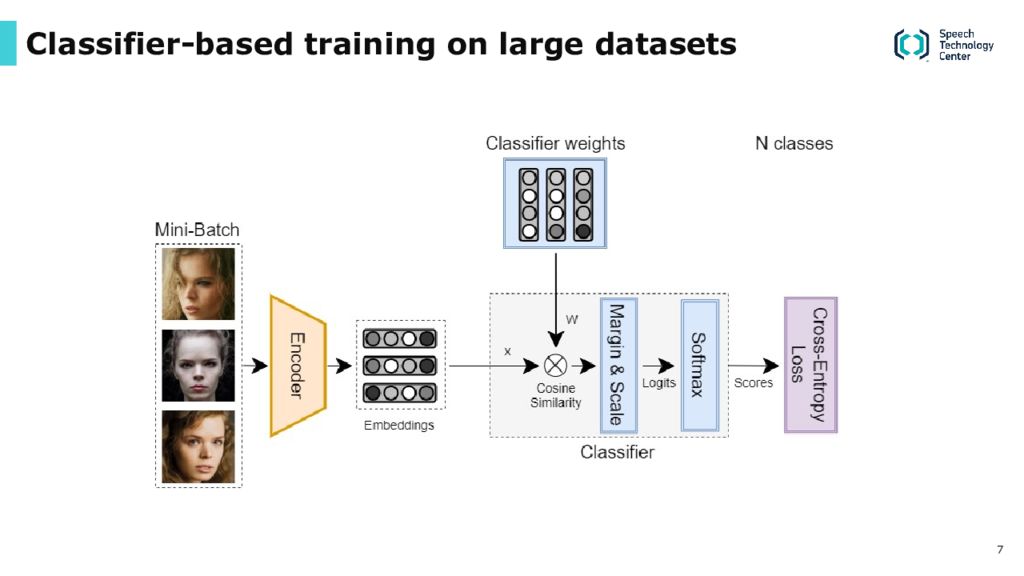

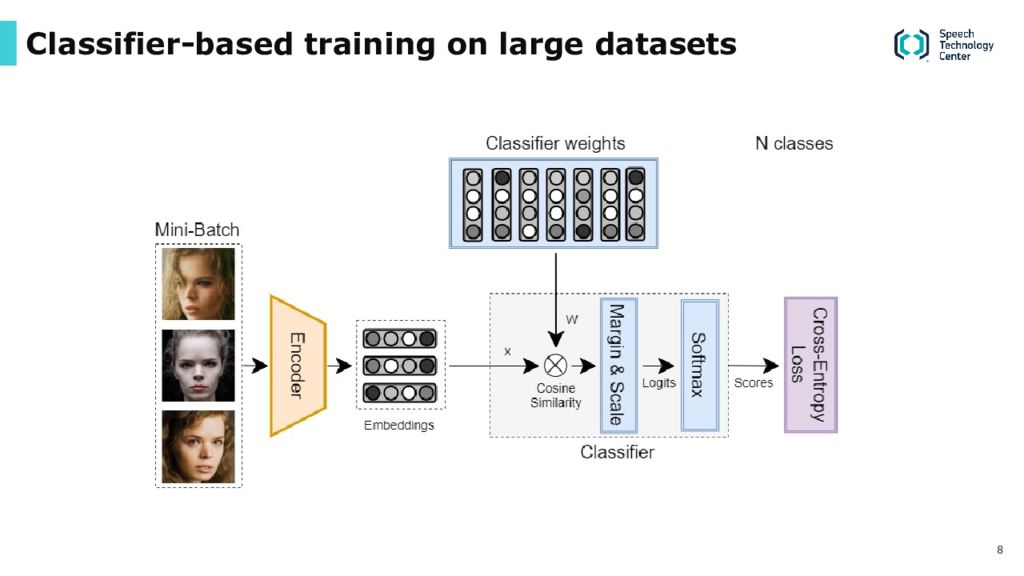

Slide 6: Classifier-based training on large datasets

Problem: memory and computation costs grow linearly with the number of classes. Classifier weights for millions of classes do not fit in ordinary GPU memory, and computing the loss function takes too long for practical use.

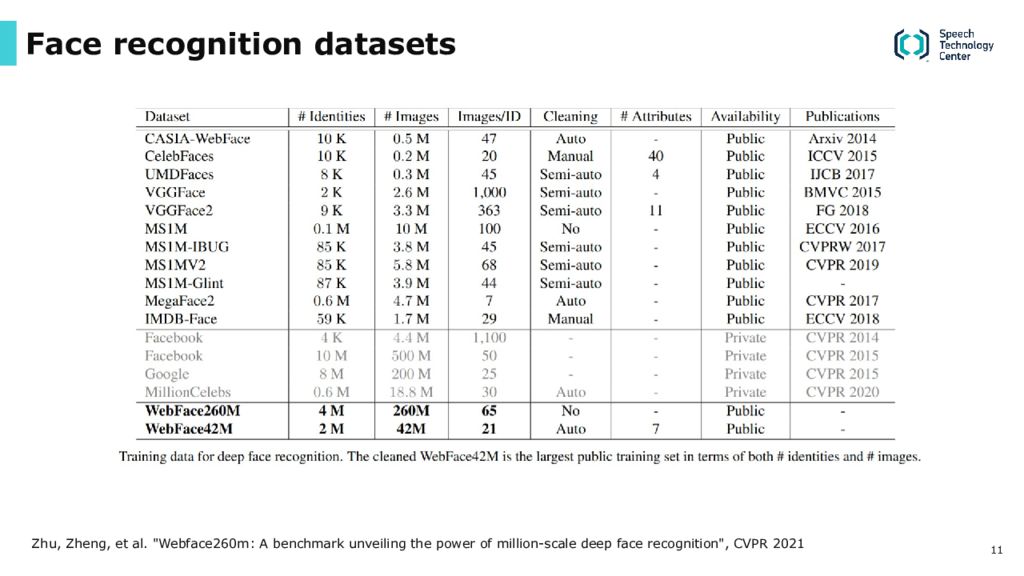
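To make the scale concrete, here is a back-of-the-envelope sketch of the size of a full classifier layer. The embedding dimension of 512 and 32-bit floats are assumptions chosen for illustration, not values stated on the slide. Gradients and optimizer state would add to the total.

```python
def classifier_weight_bytes(num_classes, embedding_dim=512, bytes_per_value=4):
    """Memory needed to store one weight vector (prototype) per class.

    embedding_dim and bytes_per_value are illustrative assumptions.
    """
    return num_classes * embedding_dim * bytes_per_value


for num_classes in (100_000, 1_000_000, 10_000_000):
    gib = classifier_weight_bytes(num_classes) / 2**30
    print(f"{num_classes:>10,} classes -> {gib:6.2f} GiB of weights")
```

The cost is linear in the number of classes, so a tenfold larger dataset needs a tenfold larger classifier.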

Slide 11: Face recognition datasets

Zhu, Zheng, et al. "WebFace260M: A benchmark unveiling the power of million-scale deep face recognition", CVPR 2021

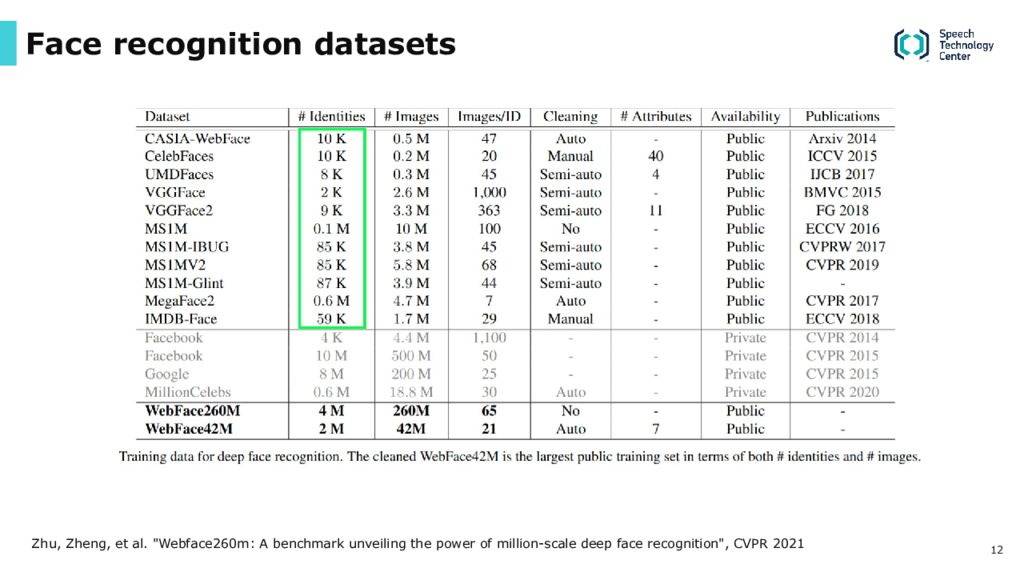

Slide 12: Face recognition datasets

Zhu, Zheng, et al. "WebFace260M: A benchmark unveiling the power of million-scale deep face recognition", CVPR 2021

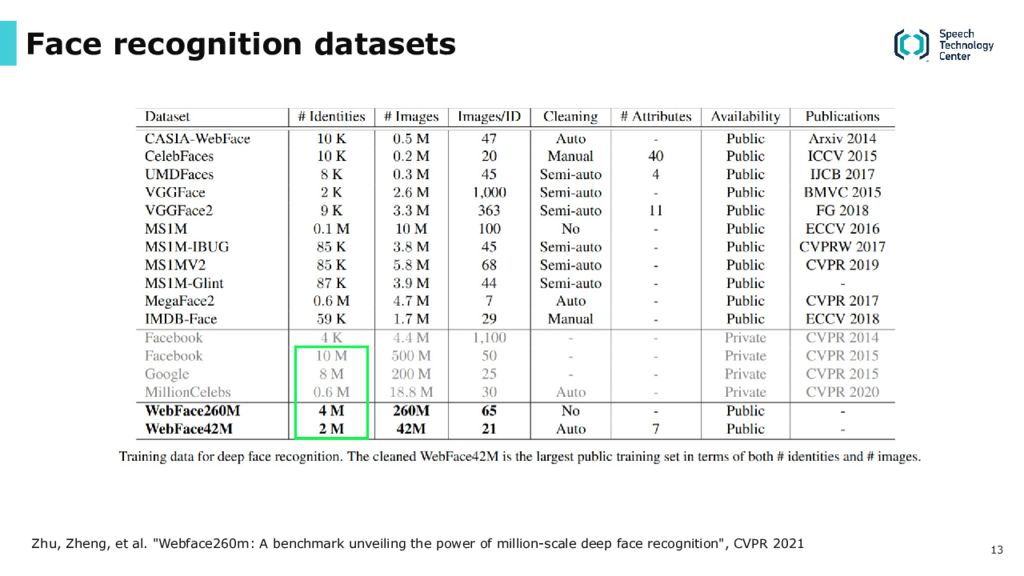

Slide 13: Face recognition datasets

Zhu, Zheng, et al. "WebFace260M: A benchmark unveiling the power of million-scale deep face recognition", CVPR 2021

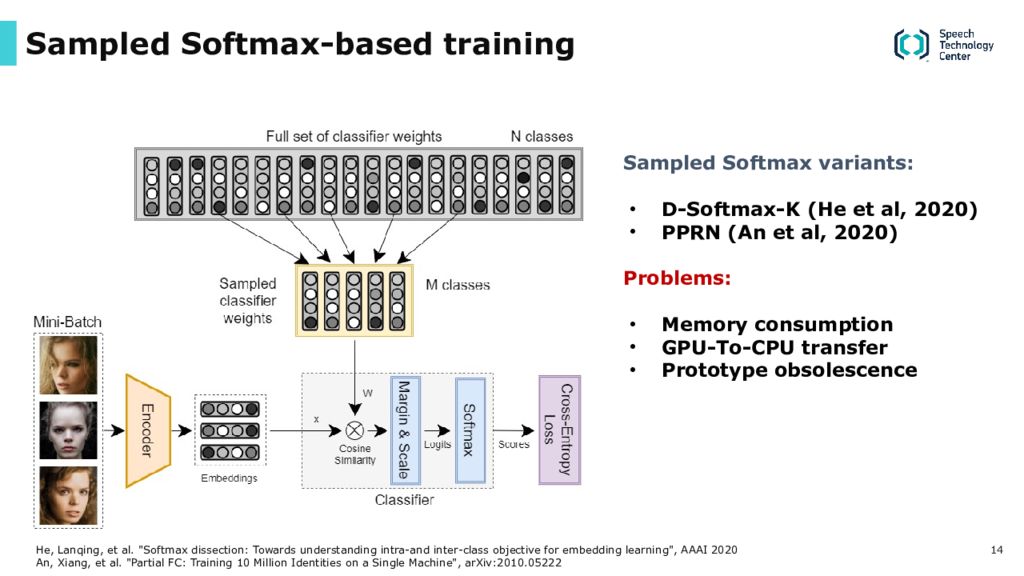

Slide 14: Sampled Softmax-based training

Sampled Softmax variants: D-Softmax-K (He et al., 2020), PPRN (An et al., 2020).

Problems: memory consumption, GPU-to-CPU transfer, prototype obsolescence.

He, Lanqing, et al. "Softmax dissection: Towards understanding intra- and inter-class objective for embedding learning", AAAI 2020

An, Xiang, et al. "Partial FC: Training 10 Million Identities on a Single Machine", arXiv:2010.05222

Slide 15: Memory consumption and GPU-to-CPU data transfer

GPU memory is fixed, but we still need to keep the classifier weights (prototypes) for all classes in the dataset in (non-GPU) memory. We also need to transfer the sampled classifier weights to the GPU and back at each training iteration.

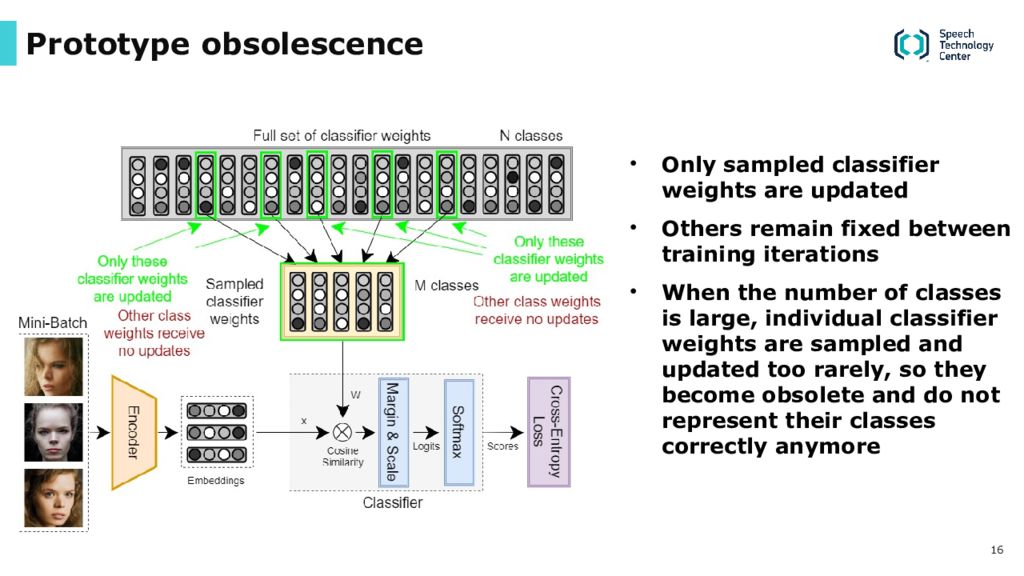
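The per-iteration class selection behind this transfer can be sketched as follows. This is an illustrative sketch in the spirit of "positives plus random negatives" sampling, not the exact procedure of any cited method. The function name `sample_classes` and all parameters are hypothetical.

```python
import random


def sample_classes(batch_labels, num_classes, num_sampled, rng):
    """Pick the classes whose weights take part in one training iteration.

    All classes present in the batch (positives) are kept; the rest of the
    budget is filled with randomly drawn other classes (negatives).
    """
    positives = sorted(set(batch_labels))
    if not len(positives) <= num_sampled <= num_classes:
        raise ValueError("num_sampled must cover the batch and not exceed num_classes")
    positive_set = set(positives)
    negatives = set()
    while len(positives) + len(negatives) < num_sampled:
        candidate = rng.randrange(num_classes)
        if candidate not in positive_set:
            negatives.add(candidate)
    sampled = positives + sorted(negatives)
    # Map global class ids to row indices of the small on-GPU weight matrix.
    local_index = {class_id: row for row, class_id in enumerate(sampled)}
    local_labels = [local_index[label] for label in batch_labels]
    return sampled, local_labels


rng = random.Random(0)
labels = [7, 42, 7, 1_000_000]
sampled, local_labels = sample_classes(labels, 5_000_000, 16, rng)
print(len(sampled), local_labels)
```

In a real training loop, the rows listed in `sampled` would be copied from host memory to the GPU, updated by the optimizer, and written back. That round trip is the transfer cost the slide refers to.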

Slide 16: Prototype obsolescence

Only the sampled classifier weights are updated; the others remain fixed between training iterations. When the number of classes is large, individual classifier weights are sampled and updated too rarely, so they become obsolete and no longer represent their classes correctly.

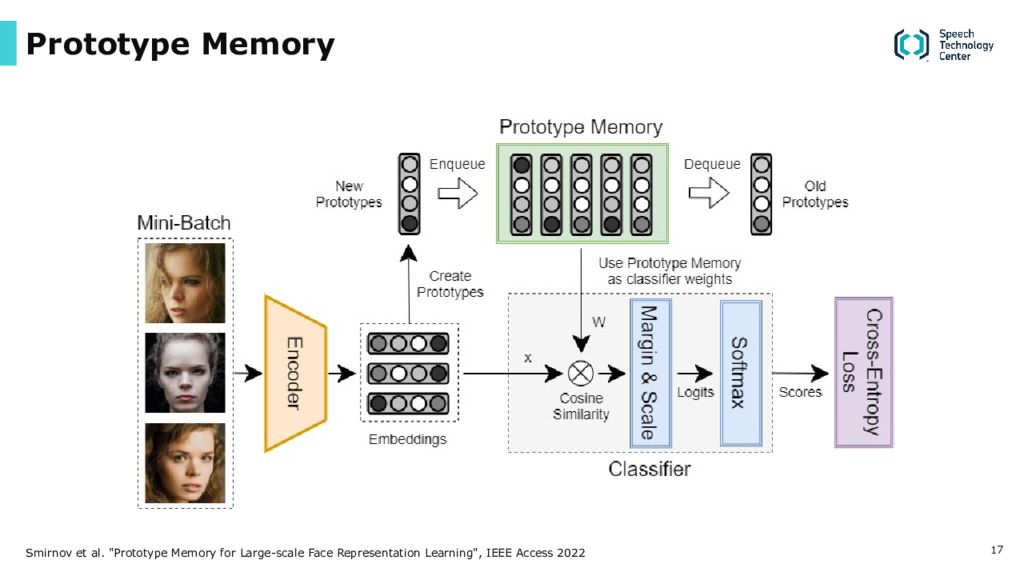
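A rough estimate shows how rarely a given prototype is touched. The sketch below assumes each iteration samples a fixed number of classes uniformly at random, which is a simplification. The class counts and sample sizes are illustrative, not figures from the slides.

```python
def expected_iterations_between_updates(num_classes, num_sampled):
    """Mean gap between two updates of one class's weights,
    assuming num_sampled classes are drawn uniformly at each iteration."""
    return num_classes / num_sampled


def probability_not_updated(num_classes, num_sampled, iterations):
    """Chance that one class's weights stay untouched for `iterations` steps."""
    return (1.0 - num_sampled / num_classes) ** iterations


for num_classes in (100_000, 1_000_000, 10_000_000):
    gap = expected_iterations_between_updates(num_classes, num_sampled=10_000)
    stale = probability_not_updated(num_classes, 10_000, iterations=100)
    print(f"{num_classes:>10,} classes: mean gap {gap:7.0f} iterations, "
          f"P(untouched for 100 iterations) = {stale:.3f}")
```

With a fixed sampling budget, the mean gap grows linearly with the number of classes, while the embedding model keeps changing at every iteration.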

Slide 17: Prototype Memory

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

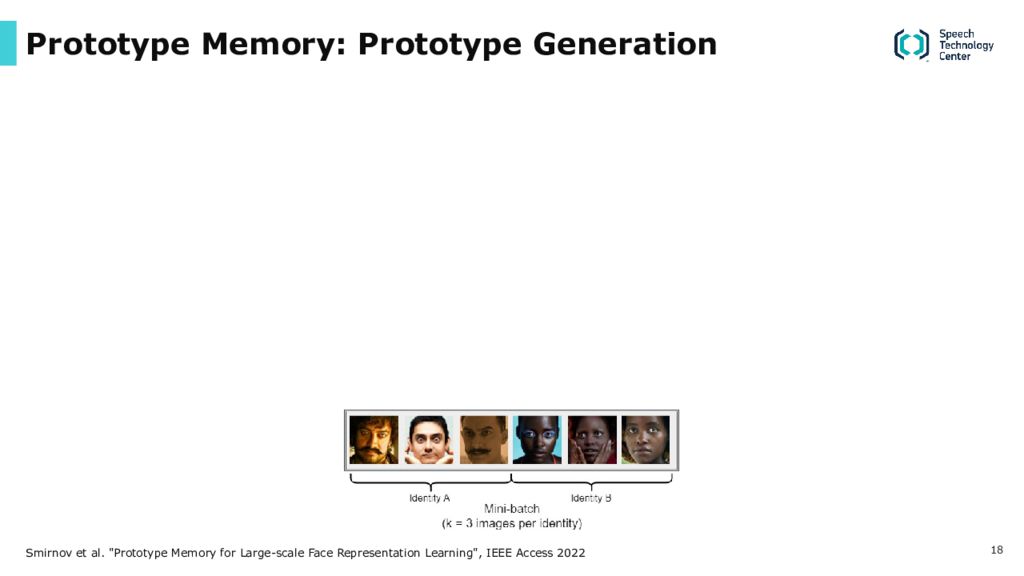

Slide 18: Prototype Memory: Prototype Generation

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

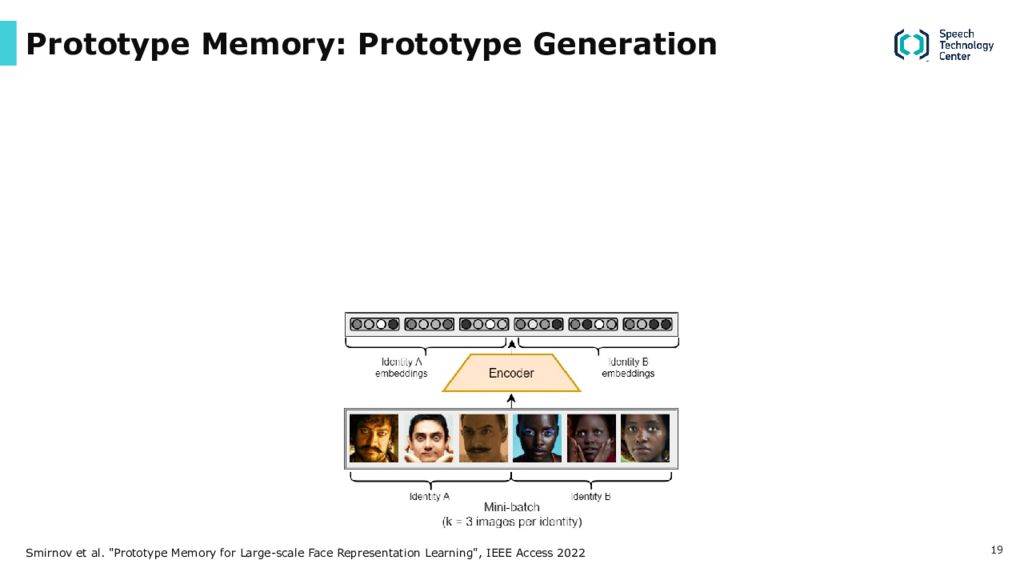

Slide 19: Prototype Memory: Prototype Generation

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

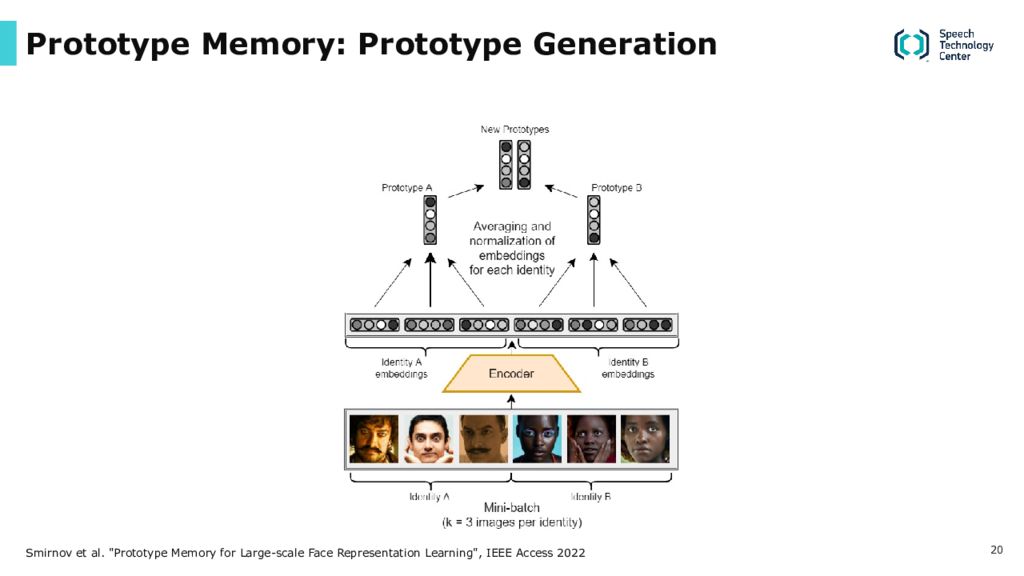

Slide 20: Prototype Memory: Prototype Generation

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

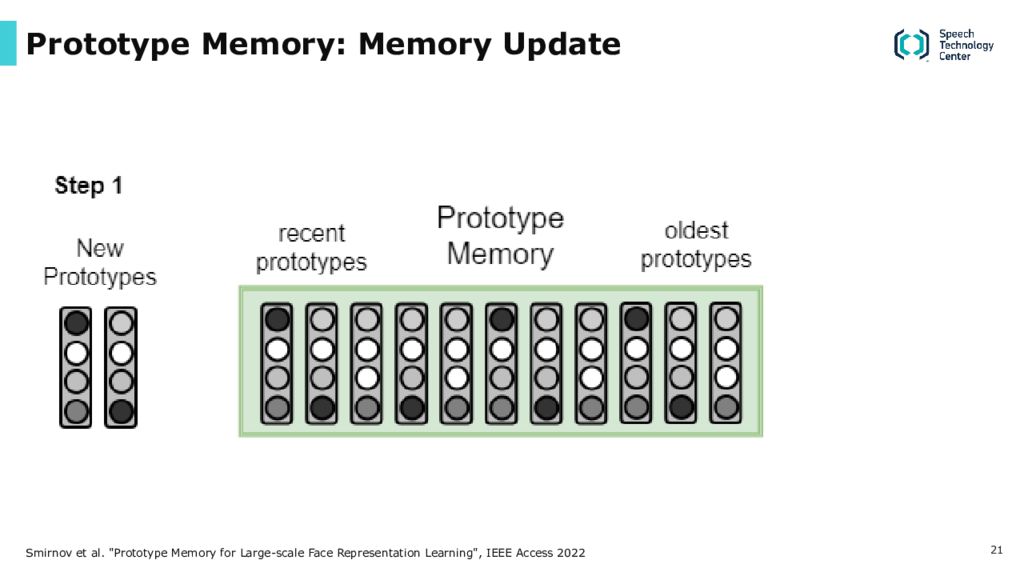
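The figures for these slides did not survive extraction. According to the cited paper's abstract, new class prototypes are generated on the fly from exemplar embeddings in the current mini-batch. A minimal sketch of that idea follows. Averaging L2-normalized embeddings per class and renormalizing the mean are assumptions, and `generate_prototypes` is a hypothetical name.

```python
import math
from collections import defaultdict


def l2_normalize(vector):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)


def generate_prototypes(embeddings, labels):
    """Return {class_id: prototype} built from the embeddings of one mini-batch.

    Assumption: a prototype is the renormalized mean of the normalized
    embeddings that share a class label.
    """
    groups = defaultdict(list)
    for embedding, label in zip(embeddings, labels):
        groups[label].append(l2_normalize(embedding))
    prototypes = {}
    for label, members in groups.items():
        mean = [sum(values) / len(members) for values in zip(*members)]
        prototypes[label] = l2_normalize(mean)
    return prototypes


batch_embeddings = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
batch_labels = [0, 0, 1]
prototypes = generate_prototypes(batch_embeddings, batch_labels)
print(prototypes)
```

Because prototypes come from the current model's embeddings, they cannot lag behind the model the way rarely sampled classifier weights do.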

Slide 21: Prototype Memory: Memory Update

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

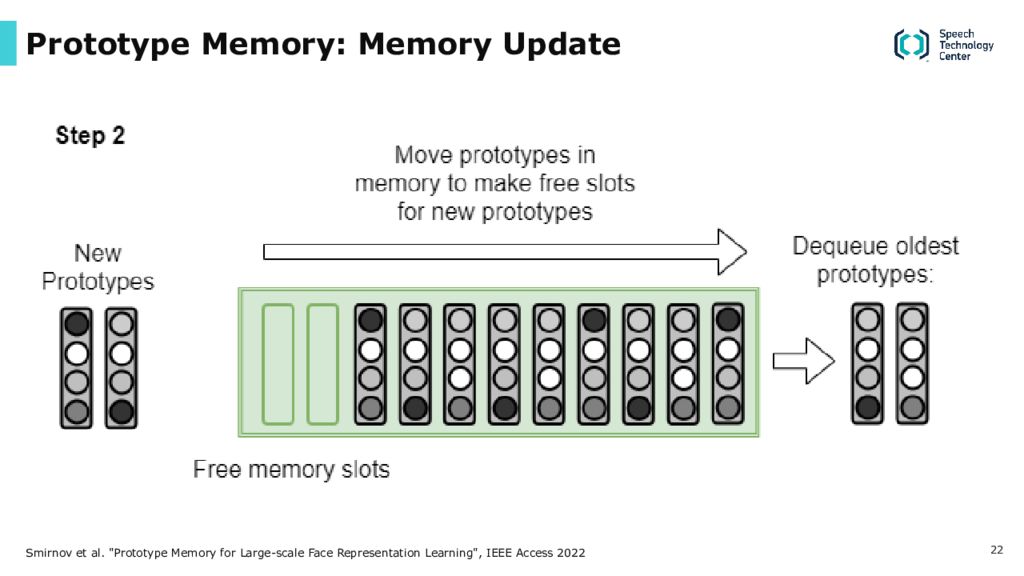

Slide 22: Prototype Memory: Memory Update

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

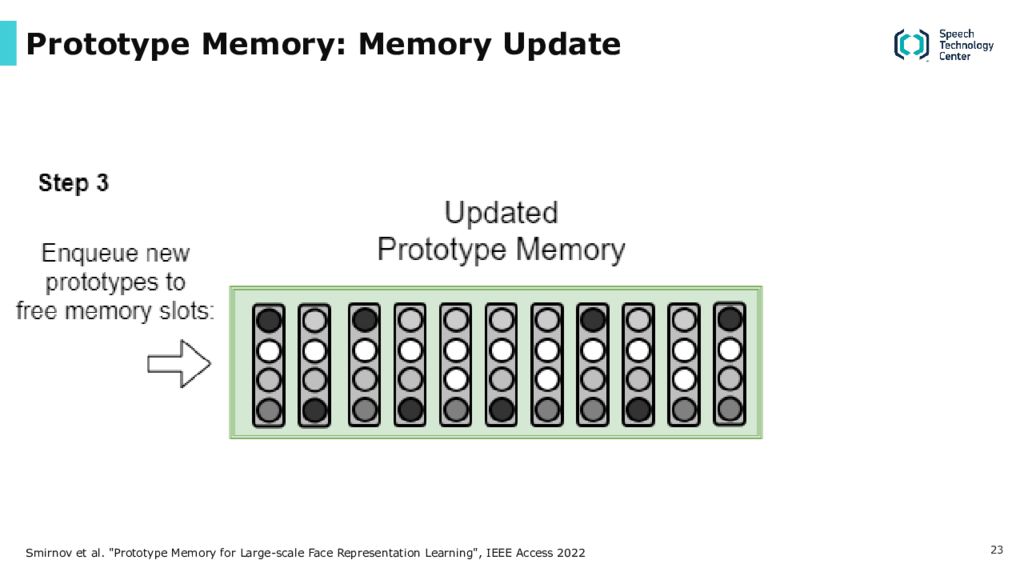

Slide 23: Prototype Memory: Memory Update

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

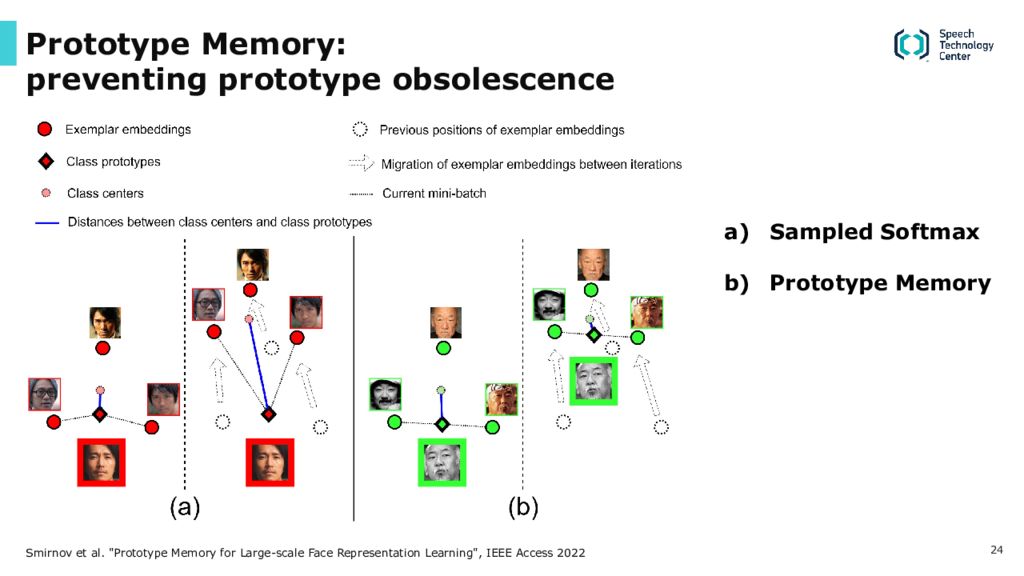
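The cited paper's abstract describes a limited-size memory: new prototypes are enqueued, existing ones are refreshed, and the oldest are dequeued and discarded. The sketch below illustrates that queue behavior only. The class name, the replace-on-refresh rule, and the use of an `OrderedDict` are assumptions, not the authors' implementation.

```python
from collections import OrderedDict


class PrototypeMemory:
    """Fixed-capacity store of the most recently seen class prototypes."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._store = OrderedDict()  # oldest entry first, newest last

    def update(self, new_prototypes):
        """Enqueue or refresh prototypes, then drop the oldest if over capacity."""
        for class_id, prototype in new_prototypes.items():
            if class_id in self._store:
                # Refresh (assumed rule): overwrite and mark as most recent.
                self._store.move_to_end(class_id)
            self._store[class_id] = prototype
            while len(self._store) > self.capacity:
                self._store.popitem(last=False)  # dequeue the oldest prototype

    def class_ids(self):
        return list(self._store)

    def weights(self):
        """Prototypes in memory order, usable as classifier weights."""
        return list(self._store.values())


memory = PrototypeMemory(capacity=2)
memory.update({1: [1.0], 2: [2.0]})
memory.update({1: [1.5]})   # class 1 is refreshed and becomes the newest
memory.update({3: [3.0]})   # class 2 is now the oldest and is dropped
print(memory.class_ids(), memory.weights())
```

The memory size is set by `capacity`, not by the number of classes in the dataset, so GPU memory use stays constant as the dataset grows.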

Slide 24: Prototype Memory: preventing prototype obsolescence

Figure panels: Sampled Softmax, Prototype Memory.

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

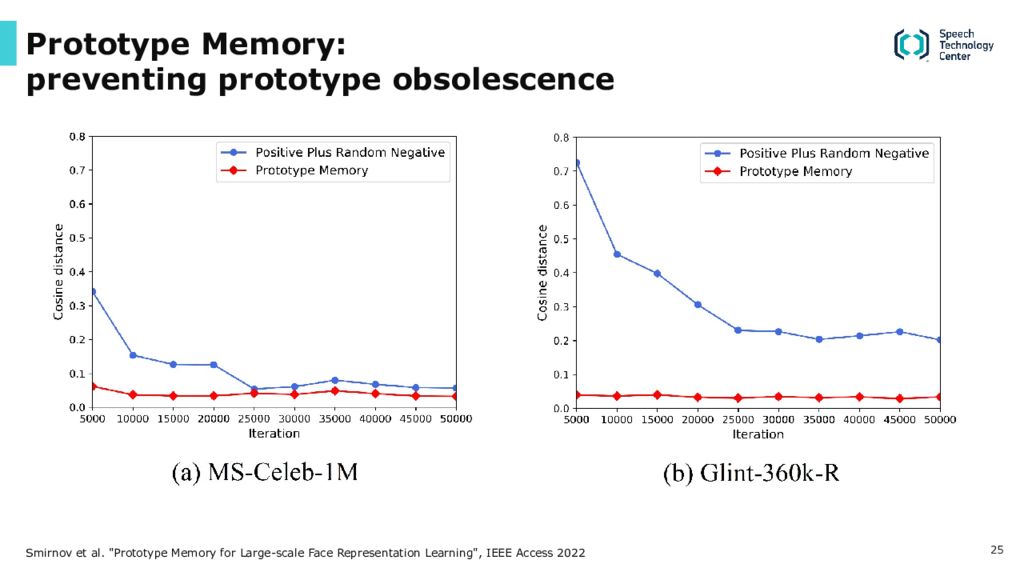

Slide 25: Prototype Memory: preventing prototype obsolescence

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

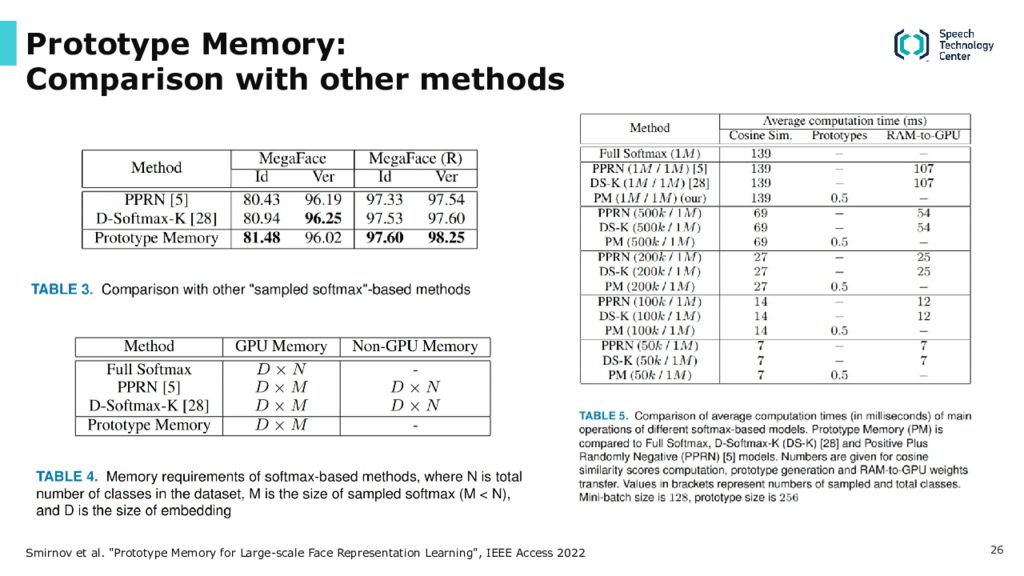

Slide 26: Prototype Memory: Comparison with other methods

Smirnov et al. "Prototype Memory for Large-scale Face Representation Learning", IEEE Access 2022

Slide 27: Conclusions

Prototype Memory is a novel method for training face recognition models on large datasets. It is fast, memory-efficient, and more accurate than other similar methods. It prevents the problem of "prototype obsolescence", which emerges in large-scale datasets.

Last slide of the presentation: How to train face recognition models on millions of persons?: THANK YOU FOR YOUR ATTENTION

Moscow: 59/2 Zemlyanoy Val St., 109004, +7 495 669 7440, stc-int@speechpro.com

St. Petersburg: Vyborgskaya Embankment 45, Bldg. E, 194044, +7 812 325 8848, stc-int@speechpro.com

Evgeny Smirnov, Senior Researcher, Speech Technology Center, e-mail: smirnov-e@speechpro.com